A Framework Inspired by Planetary Limits

When Johan Rockström and his team introduced the concept of Planetary Boundaries in 2009, they offered a new way to think about sustainability: not by what we intend to do, but by what we must not exceed. Crossing thresholds in areas such as biodiversity loss, climate change, and freshwater use could trigger irreversible environmental shifts.

Lately, at Thaddeus Martin Consulting (TMC), we’ve been exploring whether we could apply the same thinking to the social and ethical dimensions of Artificial Intelligence.

Could we define a “safe operating space” for AI—zones where innovation can flourish without breaching fundamental social, ethical, or environmental norms?

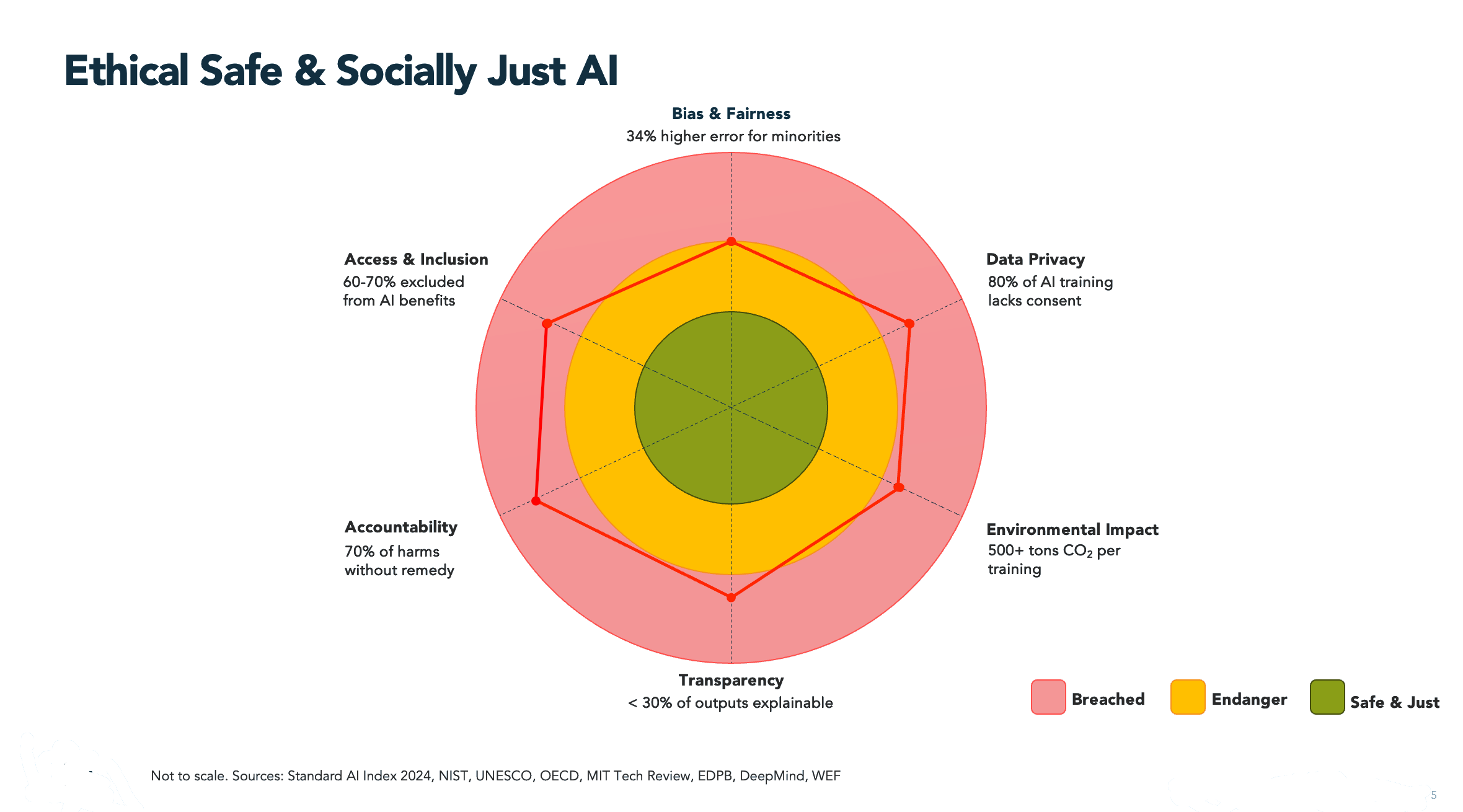

As part of our work in AI ethics consulting and responsible technology governance, we developed the radar chart below. It maps AI’s current performance across six critical domains: Bias & Fairness, Data Privacy, Environmental Impact, Transparency, Accountability, and Access & Inclusion.

It’s not a perfect model. But it’s a starting point for a much-needed AI risk framework—a way to move ethical conversations from aspiration to measurable governance.

Why These Six Pillars?

At TMC, we built this model through a careful review of globally recognised AI governance sources, including:

- Stanford AI Index 2024 (Stanford University) – a benchmark for assessing AI system performance globally.

- UNESCO Recommendation on the Ethics of Artificial Intelligence – grounding AI ethics within human rights, inclusion, and social justice.

- OECD AI Principles – setting internationally accepted ethical standards for AI development and use.

- NIST AI Risk Management Framework (AI RMF 1.0) – providing operational risk assessment and management tools.

- MIT Technology Review reporting on AI ethics and DeepMind’s research on AI sustainability and governance – providing empirical insights into bias, environmental impacts, and model transparency.

- European Data Protection Board (EDPB) guidance on AI and data privacy under GDPR – addressing lawful data sourcing and privacy rights for AI systems.

- World Economic Forum (WEF) reports on equitable access and responsible AI deployment – highlighting the systemic social impacts of AI.

Our approach deliberately reflects a cross-section of regulatory, academic, scientific, and operational perspectives, aligned to TMC’s broader philosophy: governance must be credible, diverse, and implementable.

The Current Reality: Breach Zones Everywhere

Through the lens of our ethical boundaries framework, today’s AI ecosystem shows significant breaches:

- Bias & Fairness: Error rates up to 34% higher for minority groups.

- Data Privacy: 80% of datasets lack lawful consent.

- Environmental Impact: Training large models releases 500+ tons of CO₂.

- Transparency: Only 30% of AI outputs are explainable.

- Accountability: 70% of AI-driven harms go unremedied.

- Access & Inclusion: 60–70% of the global population is excluded from AI benefits.

For anyone working in AI risk management or sustainable AI development, the conclusion is clear: without structured thresholds, we risk embedding systemic failures into the foundations of our digital future.
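
To make the Bias & Fairness figure concrete, here is a minimal sketch of one way to read "error rates up to 34% higher": as a relative gap between the best- and worst-served groups. That reading is an assumption, as are the function name `relative_disparity` and the sample error rates, which are hypothetical and not TMC data.

```python
def relative_disparity(error_rates: dict[str, float]) -> float:
    """Relative gap between the worst and best per-group error rates.

    Assumes "X% higher" means (worst - best) / best; an absolute
    percentage-point gap would be a different, equally valid metric.
    """
    best = min(error_rates.values())
    worst = max(error_rates.values())
    if best <= 0:
        raise ValueError("best-group error rate must be positive")
    return (worst - best) / best


# Hypothetical per-group error rates, chosen only to illustrate the arithmetic.
sample_rates = {"group_a": 0.100, "group_b": 0.134}
gap = relative_disparity(sample_rates)
print(f"Relative disparity: {gap:.0%}")
```

Whichever definition is used, it needs to be fixed before a reading can be compared against a threshold, since relative and absolute gaps can differ widely for the same system.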

Defining a Safe and Just AI Operating Space

TMC proposes a measurable and practical set of responsible AI benchmarks:

| Domain | Safe Zone Threshold |
|---|---|
| Bias & Fairness | <5% disparity across demographic groups |
| Data Privacy | 95–100% lawful consent on training data |
| Environmental Impact | <50 tons CO₂ per model (or carbon-neutral offset) |
| Transparency | >50% explainability; >80% for critical systems |
| Accountability | 100% harms attributable and legally remediable |
| Access & Inclusion | >90% global population AI benefit inclusion |

These thresholds aren’t theoretical. They offer a practical roadmap for ethical AI system design, impact investing, regulatory compliance, and governance strategies.
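
As a rough illustration of how the table above could be turned into an automated check, the sketch below encodes each threshold as a comparison and tests a set of readings against it. The names `SAFE_ZONE`, `CURRENT_READINGS`, and `breached_domains` are hypothetical. The readings are our own restatement of the breach figures listed earlier (for example, "80% of datasets lack lawful consent" is taken as 20% with consent), so treat them as assumptions rather than measurements. Only the general Transparency threshold (>50%) is modelled, not the stricter one for critical systems.

```python
import operator

# Safe-zone rules transcribed from the table: (comparison, limit).
# Units are percentages, except Environmental Impact (tons of CO2 per model).
SAFE_ZONE = {
    "Bias & Fairness": (operator.lt, 5.0),
    "Data Privacy": (operator.ge, 95.0),
    "Environmental Impact": (operator.lt, 50.0),
    "Transparency": (operator.gt, 50.0),
    "Accountability": (operator.ge, 100.0),
    "Access & Inclusion": (operator.gt, 90.0),
}

# Illustrative readings derived from the breach list (assumptions, see lead-in).
CURRENT_READINGS = {
    "Bias & Fairness": 34.0,        # error rates up to 34% higher
    "Data Privacy": 20.0,           # 80% lack lawful consent
    "Environmental Impact": 500.0,  # 500+ tons of CO2
    "Transparency": 30.0,           # 30% of outputs explainable
    "Accountability": 30.0,         # 70% of harms unremedied
    "Access & Inclusion": 40.0,     # 60-70% excluded, optimistic end
}


def breached_domains(readings: dict[str, float]) -> list[str]:
    """Return the domains whose reading falls outside its safe zone."""
    return [
        domain
        for domain, (is_safe, limit) in SAFE_ZONE.items()
        if not is_safe(readings[domain], limit)
    ]


print(breached_domains(CURRENT_READINGS))
```

With these illustrative readings every domain is reported as breached, which matches the picture described in the previous section. A real assessment would replace `CURRENT_READINGS` with audited, system-specific measurements.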

Why Planetary Boundaries Matter for AI Governance

The Planetary Boundaries model was revolutionary because it:

- Set hard thresholds rather than soft goals.

- Enabled system-level monitoring of ecological health.

- Created a shared understanding of unacceptable risk.

Similarly, in the context of AI ethics and compliance, adopting boundary-based thinking is essential. Governance must be predictive, preventative, and measurable—not just reactive.

Without ethical boundaries, trust in AI will continue to erode.

Without responsible governance, AI risks exacerbating existing inequities and creating new systemic harms.

Putting it together

Combined, the current readings and the safe-zone thresholds look like this:

Final Reflections

At Thaddeus Martin Consulting, we work with investors, founders, and policymakers to design responsible AI governance frameworks that align innovation with ethical resilience.

This diagnostic tool is part of our broader commitment to help organisations:

- Build AI due diligence protocols aligned with best practices.

- Integrate ESG considerations into AI-driven investment strategies.

- Prepare for evolving AI regulatory frameworks across jurisdictions.

As AI becomes central to critical infrastructure, finance, healthcare, and climate solutions, the need for actionable, risk-based ethical standards will only grow.

If you are investing in, building, or regulating AI systems, we would love to hear your perspective—and collaborate on shaping a future where technology operates safely within human and planetary boundaries.

Sources:

- Stanford AI Index 2024 (Stanford University)

- UNESCO Recommendation on AI Ethics

- OECD AI Principles

- NIST AI RMF 1.0

- MIT Technology Review and DeepMind research on AI ethics and sustainability

- European Data Protection Board (EDPB) guidance on AI and GDPR compliance

- World Economic Forum (WEF) Reports on Responsible AI